What this boils down to:

1) It's not a 4th Amendment case. The 4th Amendment covers unreasonable searches and seizures. Since a warrant was issued and the owner of the phone has consented, the search is not unreasonable.

2) It MAY be a First or Fifth Amendment case. Can the government compel disclosure of an encryption key or security code? Maybe. If the individual were still alive, he could arguably not be forced to disclose the key. Apple? Maybe, maybe not. Software code has been treated as protected speech under the First Amendment, which would be the argument against forcing someone to write specific new software. But AFAIK, there's not much case law in this area.

3) Is part of this a marketing statement by Apple? Yes.

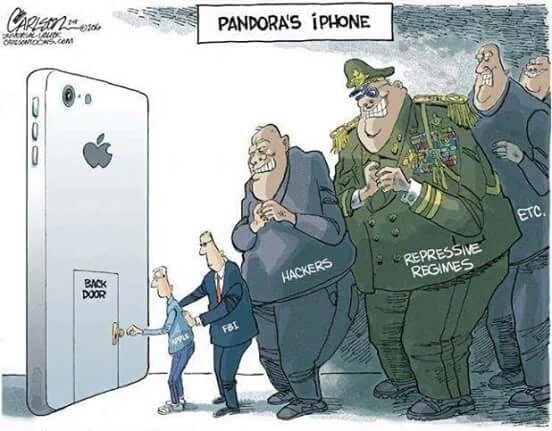

4) Does this open a security hole for the product or put Apple in a pretty difficult position dealing with various governments? Yes. The mere fact of disclosing that the units can be cracked is enough to encourage hackers and/or foreign governments. It could encourage bans of the equipment in other countries unless the crack method is disclosed. And it certainly sets a precedent in the US of requiring disclosure for whatever reason the secret national security court decides, even if the justification is weak. Do you trust your government, hackers, and other governments?

5) Is the FBI pushing this because they can't get legislation through Congress? No doubt about it. This is truly creating new law via the courts. It sets a troubling precedent.

6) Could the problem have been avoided? In this case, yes. If the county had installed and properly managed MDM software, this likely would not have happened. And resetting the iCloud password was really not smart: it kept the phone from making a fresh iCloud backup that Apple could have handed over.

7) Will this stop smart terrorists in the future? Not a chance. With packages like CyanogenMod on Android, one can install an OS with encryption and wipe features that no one can break. TrueCrypt used to offer that kind of protection for hard drives on desktops and laptops. Although TrueCrypt has been withdrawn (under mysterious circumstances), the approach is certainly feasible.

8) Whether Apple can crack this is not certain at this point. The "fail counter" is probably enforced outside the encrypted data, and therefore might be vulnerable, but if the user employed a strong passphrase the phone might still not unlock.
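To put rough numbers on the passphrase point: once the fail counter is out of the way, the only barrier is how long each guess takes. The sketch below assumes about 80 ms per attempt (a figure Apple has cited for its on-device key derivation; treat it as an assumption, since real timings vary by hardware and OS version) and no other delays. The function names and example passcode formats are hypothetical, chosen only for illustration.

```python
# Rough worst-case brute-force estimate for a passcode, ASSUMING the fail
# counter / auto-wipe has been bypassed and each guess costs a fixed delay.
# ASSUMPTION: ~80 ms per attempt; actual cost depends on device and software.

SECONDS_PER_ATTEMPT = 0.08  # assumed per-guess cost in seconds


def worst_case_seconds(alphabet_size: int, length: int,
                       per_attempt: float = SECONDS_PER_ATTEMPT) -> float:
    """Time to try every passcode of the given length over the given alphabet."""
    return (alphabet_size ** length) * per_attempt


def human(seconds: float) -> str:
    """Format a duration using the largest unit that fits."""
    units = [("years", 365 * 24 * 3600), ("days", 24 * 3600),
             ("hours", 3600), ("minutes", 60)]
    for name, size in units:
        if seconds >= size:
            return f"{seconds / size:,.1f} {name}"
    return f"{seconds:.1f} seconds"


if __name__ == "__main__":
    # Hypothetical passcode formats: (label, alphabet size, length)
    cases = [
        ("4-digit PIN", 10, 4),
        ("6-digit PIN", 10, 6),
        ("8-char lowercase+digits", 36, 8),
        ("10-char mixed-case+digits", 62, 10),
    ]
    for label, alphabet, length in cases:
        print(f"{label:28s} {human(worst_case_seconds(alphabet, length))}")
```

Under those assumptions a 4-digit PIN falls in minutes and a 6-digit PIN in under a day, while even an 8-character alphanumeric passphrase runs to thousands of years. That is why the strength of the passphrase matters even if the counter can be bypassed.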

9) If Apple builds this and it doesn't work (the memory gets trashed), who will be held responsible? I wouldn't want to touch the coding on this one without clear indemnification.

The case is not simple, but the key to it is the government wanting to set a precedent to get around the legislative process, and Apple wanting to protect its reputation for both liability and security reasons...

This cartoon from Toles sums it up (at least as far as Google and MS are concerned, and to a lesser degree Apple):

http://www.gocomics.com/tomtoles/2016/02/21